Trustworthy AI by Design

AI is no longer a question of if, but how.

Across industries, teams are racing to integrate AI into products, services, and operations. Some move fast and take risks without understanding the consequences. Others hesitate, uncertain of where to begin or how to manage accountability. But there is no turning back: the challenge now is to ensure AI is adopted where it’s needed and how it’s needed, and a big part of that “how” depends on how trustworthy the AI is.

We wrote this research because, in every conversation with AI leaders, product creators, and decision makers, we kept hearing the same frustration:

“We know trust matters. We just don’t know how to measure it or build it into our products.”

That is where operationalizing trust comes in: turning trust from an abstract principle into something measurable, repeatable, and built directly into the AI systems we design and deploy. It is the bridge between intention and execution, helping creators, CPOs, CTOs, and AI directors translate their AI strategies into product reality.

In our discussions, one pattern was clear: when AI operates as a black box, trust breaks. Systems that offer recommendations without clear reasoning face rejection, especially in high-stakes domains where outcomes affect people’s health, finances, or safety. Stakeholders want AI’s benefits, but they demand rigorous safeguards against its risks, safeguards that must be in place before the system ever reaches end users. There’s no room for experimenting in production: trust patterns need to be built into the AI product from the start, or adoption may suffer, sometimes seriously.

Users do not want AI to replace human judgment unless its reliability is fully guaranteed. Yet this level of certainty is very difficult to achieve in AI systems, given the inherent variability of input data, which can cause drift over time and unpredictable edge cases at inference time, and the non-deterministic nature of some AI tools such as Large Language Models. Designing AI features therefore requires applying trust patterns from the very beginning: deciding how to automate repetitive, tedious, time-consuming tasks to enhance human accuracy and speed, while preserving agency and accountability in the moments where they matter most.

So our goal was simple: build an AI design and development framework that guarantees trustworthy AI right from the start.

The industry is facing a trust gap. Everyone agrees that, for users to see value in AI, it must be reliable, safe, secure, and explainable. Yet few know how to make that happen day to day, and in some cases, trust in AI is even eroding. For example, recent data from Harvard Business Review's 2025 workforce survey show that frontline employee trust in company-provided AI tools dropped sharply this year, declining 31% over just a few months, reflecting growing skepticism about AI’s reliability and value.

AI strategies are moving fast. Every product team wants to integrate some new, exciting AI feature. Yet governance, measurement, and user-centric guidelines lag behind. As a result, systems that perform impressively in demos end up underused by their end users, produce wrong outcomes under real-world conditions, or never make it to production at all.

Beyond whether to use AI at all, the question now is how to anticipate and mitigate the inherent risks that accompany any cutting-edge technology, risks made sharper here by AI’s unique accuracy challenges. The rapid progress and visibility of AI create a sense of urgency across industries, fueling fear of being left behind. Yet the AI market is saturated with unproven tools and inflated claims, and the financial, reputational, and ethical risks are too high for blind adoption.

This mismatch creates three core challenges:

1. We’re shipping faster than we can govern.

2. We acknowledge trust, but can't measure it (yet).

Everyone talks about AI adoption and its challenges. Similarly, the ethical aspects of AI are extensively discussed in panels and roundtables in both industry and academia. But of all the dimensions of “Trustworthy AI,” few are actually translated into measurable, testable signals. Concepts like “User-centric AI” or “Responsible AI” remain aspirations rather than operational standards. Gartner survey findings reinforce that maturity comes from measurability: teams that can define and track trust indicators such as reliability, safety, and transparency scale faster and adapt better to new regulations and market demands.

Notably, high-maturity organizations are far more likely to quantify the benefits and risks of their AI initiatives and thus sustain success over time.

As Microsoft CEO Satya Nadella put it in a 2025 TechRadar interview, “If you don’t trust it, you’re not going to use it,” underscoring the importance of tangible trust signals for adoption.

3. Implementation is inconsistent.

Reliability, security, transparency, and safety vary widely across products, teams, and industries. In too many cases, trust depends more on luck than on process. As the World Economic Forum's 2025 article on how to scale AI responsibly points out, key pillars of trustworthy AI (transparency, reliability, safety, and security) are not uniformly built into systems today, and the prevalence of bias, misinformation, and “black box” decision-making has made enterprises understandably cautious about adoption.

The shift underway is clear. We are moving from asking “Where should we use AI?” to asking “How do we design AI that people want to use and trust when it matters?” That shift requires new tools, a shared language, and measurable frameworks for each principle, not just high-level principles on paper.

AI models are likely to lose accuracy when deployed outside their training environments. This reality requires, on one hand, resilient products that can handle uncertain scenarios without causing user friction, and on the other, tools for organizations to monitor, control and gradually improve the AI-based solutions. The World Economic Forum notes that even seemingly minor mistakes can have outsized consequences in high-stakes contexts, elevating the need for systems that gracefully handle data drift, edge cases, and evolving conditions.

Furthermore, AI tools that generate excessive, disorganized output paradoxically increase workload rather than reducing it. When using an AI system requires sifting through irrelevant or incorrect results, the implementation costs quickly exceed the benefits. If using the AI means more time, effort, or cognitive burden for the user, they will abandon the system.

AI systems open new attack surfaces, from data leakage to prompt injection and model extraction. This demands secure-by-design architectures that limit exposure without slowing users down, alongside robust tools to audit access, detect anomalies, and enforce policy. According to Gartner’s 2025 AI TRiSM Report, data compromise, malicious prompts, and model inaccuracies now top the list of AI-related risks for organizations. When users doubt the protection of their data or workflows, trust erodes and adoption stalls. Data protection and security must therefore be non-negotiable foundations of any AI feature; leaders need to treat privacy and compliance as “table stakes” in order to scale AI confidently in a fast-evolving regulatory landscape.

AI features that fail in unknown scenarios might produce unsafe, biased, or policy-breaking outputs that force users to double-check and correct results, adding friction instead of reducing it. If the effort to guard against harmful outcomes outweighs the benefit of automation, users will disengage or restrict usage to low-stakes tasks, sharply limiting the system’s real impact. In practice, organizations have learned that even one instance of a blatantly wrong or biased AI output can shatter user confidence permanently.

Why it matters is simple

Trust drives adoption. Adoption drives retention. Retention drives ROI.

Organizations that treat trust as a design principle, not a PR statement, will unlock sustainable growth. Business leaders are increasingly recognizing that trusted AI delivers tangible returns: for instance, when AI is grounded in accurate, secure data and transparent reasoning, it creates greater user trust, which drives higher adoption and ultimately ROI for the business.

The World Economic Forum’s 2025 outlook describes trust as “the new currency in the AI economy.” As AI agents and autonomous systems expand, WEF argues that trust will be the defining factor separating companies that lead from those left behind. Unlike hype-driven launches or one-off compliance efforts, trust compounds. It strengthens brand reputation, supports regulatory readiness, and builds long-term differentiation. Companies that engineer trust early will lead not only in innovation but in endurance, creating AI systems that people rely on, return to, and recommend. This is crucial for increasing implementation success rates, as even small errors, whether fabricated or inaccurate outputs, can permanently damage confidence.

Over the past months, we have spoken with more than 50 AI and product leaders across the United States and Europe, and actively observed what user communities discuss, mostly within healthcare, the first testbed for our framework.

These conversations included CPOs, CTOs, startup founders, COOs, Heads of AI and ML, investors, product builders, and R&D supporters, along with dozens of exchanges at HLTH 2025 in Las Vegas, the biggest health conference in the USA, and at other events we participated in.

We combined these stories from the trenches and AI adoption reports with leading frameworks such as the EU AI Act and several global responsible AI reports to understand how trust is being built in practice.

In these conversations, we asked builders to replay real moments: the last AI feature they shipped, where trust felt shaky, and what made them slow down or say yes. We talked about what “reliable” means in their day-to-day, how they handle safety and security, and which explanations actually help people act. We dug into trade-offs (speed and accuracy in fully autonomous systems vs. human oversight in AI-augmented applications), who gives the green light, and what breaks confidence.

Why Healthcare

Healthcare gave us the clearest view of where trust meets regulation. It is a sector where precision, compliance, and safety are not optional: they are the price of entry.

It also mirrors what is happening in other industries: everyone wants to move fast, but without paying the adoption cost of broken trust. In high-stakes domains, trust compounds slowly but shatters instantly.

Across healthcare stakeholders (patients, clinicians, and administrators), trust emerged strongest when AI functioned as an augmentation tool rather than a replacement. Users don’t reject automation itself; they fear poor reliability, opacity, loss of control, and unclear accountability. Trust grows when systems are autonomous where they can be, and supervised where they should be.

The Core Insight

Everyone agrees that trust matters, but no one agrees on how to measure it.

Even the most advanced teams are still translating trust concepts into daily practice, sometimes swayed by the ethical debate around AI, sometimes focusing too narrowly on the technical challenges. Evolving processes demand new roles, new ways of working and co-creating with end users, clear ownership, review steps, and day-to-day practices that are still missing in most teams.

Trust starts when AI works as expected, consistently, accurately, and predictably.

Before people believe in its potential, they need proof that it performs. But not as a one-size-fits-all solution: proof that it works for its intended goal, nothing more, nothing less.

Confidence grows when people understand how AI reaches its conclusions.

Clarity turns skepticism into collaboration, inviting users to engage rather than resist.

It endures only when systems are built on strong foundations of compliance and data protection.

If people can’t trust how their information is handled, they won’t engage at all.

As AI’s influence expands, responsibility must grow in parallel.

In high-risk use cases, safety takes precedence. The greater the potential for harm, the stronger the need for error management, adaptability, oversight, traceability, and human judgment.

Three Adoption Mindsets

Through our research in healthcare, we identified three recurring mindsets toward AI adoption. Although they emerged in a regulated setting, they reflect broader patterns seen across industries exploring AI integration and development.

When facing novelty and uncertainty, people adopt different thinking styles. These mindsets shape how organizations view risk and opportunity, and how they build and sustain trust over time.

Early Adopters

Already understand and trust the technology. Their focus is on proving reliability and scaling it effectively in real-world settings.

Explorers

Balance curiosity with caution. They see potential and don’t want to fall behind, but they’re still discovering where and how to apply it responsibly.

Skeptics

Remain unconvinced about the value or trustworthiness of the technology and prefer to wait for clearer evidence or regulation before engaging.

While these mindsets may resemble traditional technology adoption stages, they differ in focus. They describe not when organizations adopt AI, but how they approach trust, responsibility, and oversight as they do so.

Early Adopters

Already integrating AI in production, this group focuses on accuracy, guardrails, and accountability, often building their own mechanisms to ensure trust and applying extensive training and testing, even without a formal framework in place.

They are building systems that aim for reliability and transparency, improving quantitative evaluations, implementing cutting-edge strategies to maximize performance, increase grounding and reduce hallucinations, or to cope with ambiguities typical of non-deterministic models, all while ensuring that when errors occur, they fail gracefully rather than unpredictably.

Their main challenge lies in fragmented accountability and the constant evolution of both technology (to address these challenges) and expectations (not just from users but also from regulations).

They are driven by a sense of professional duty and credibility, proving that innovation and responsibility can coexist.

For early adopters, the hard part is scaling: keeping reliability, safety, and cost under control so that usage keeps growing.

AI Explorers

Curious but cautious, these teams are experimenting with AI, testing low-risk use cases before committing major resources.

They want proof that AI works, not promises, and they won’t take the leap of faith of putting AI in front of end users until that evidence is consistent. They look for measurable value, transparency, and clear guidance.

They worry about reliability, data privacy, and the confusion caused by a crowded market filled with “AI-hyped” products.

Their motivation is cautious optimism, a belief in AI’s potential balanced by a fear of misstep or wasted investment.

For this group, market saturation, unclear differentiation, and bundling strategies create analysis paralysis.

Skeptics

Thoughtful resisters, they see the risks of AI or simply do not yet see its value.

They prioritize human interaction, safety, and fairness. Some lack clarity on how or where AI might fit, while others actively test systems to verify whether they can be trusted.

Their main fear is that AI will add confusion, extra work, bias, or simply damage their product’s reputation.

Their mindset is simple: if you cannot understand it, you cannot trust it; and if you cannot trust it, you shouldn’t implement it.

For this mindset, the barrier is not only technical implementation but the surrounding context. For teams adopting AI internally, the primary challenge is change management: people need training, clarity on accountability, and reassurance about how their roles will evolve.

For teams building customer-facing AI features, the challenge shifts toward user adoption: skeptics question why their users would trust or pay for features that may add errors or confusion. Change management still matters, but the priority becomes showing that the AI strengthens the product instead of disrupting it.

Beyond Healthcare

What is happening in healthcare is already spreading. The same dynamics appear wherever decisions carry real consequences for people or organizations.

Efficiency-Driven Industries

such as retail, logistics, manufacturing, and customer service prioritize reliability, uptime, and human escalation when AI reaches its limits.

Mission-Driven Domains

such as education, nonprofits, and accessibility initiatives build trust through fairness, affordability, and transparency.

Highly Regulated Sectors

such as finance, insurance, law, human resources, and government all require explainability, audit trails, and clear ownership of responsibility.

The Takeaway

Healthcare shows what happens when innovation meets regulation head on.

It is a stress test for trustworthy AI and a preview of what every industry will soon face, either through new regulations or through user expectations.

While priorities differ by domain, technology buyers converge on common concerns: clear ownership of risk, transparent data handling, defined failure playbooks, and live performance monitoring.

For adoption to accelerate, trust becomes the real differentiator.

Organizations that learn to measure it, design for it, and operationalize it will define the next phase of AI maturity.

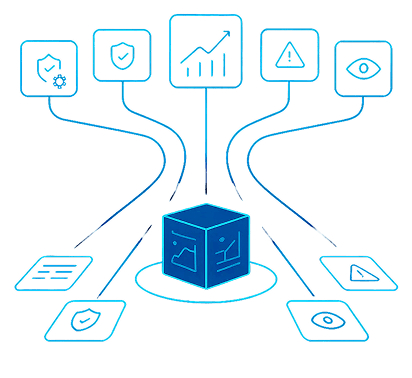

The Trust OS Framework bridges the gap between the high-level ambition of building trustworthy AI and the practical realities of implementing it. It turns trust into a design principle, something measurable, repeatable, and embedded in every stage of the product lifecycle, from research and ideation to deployment and continuous improvement.

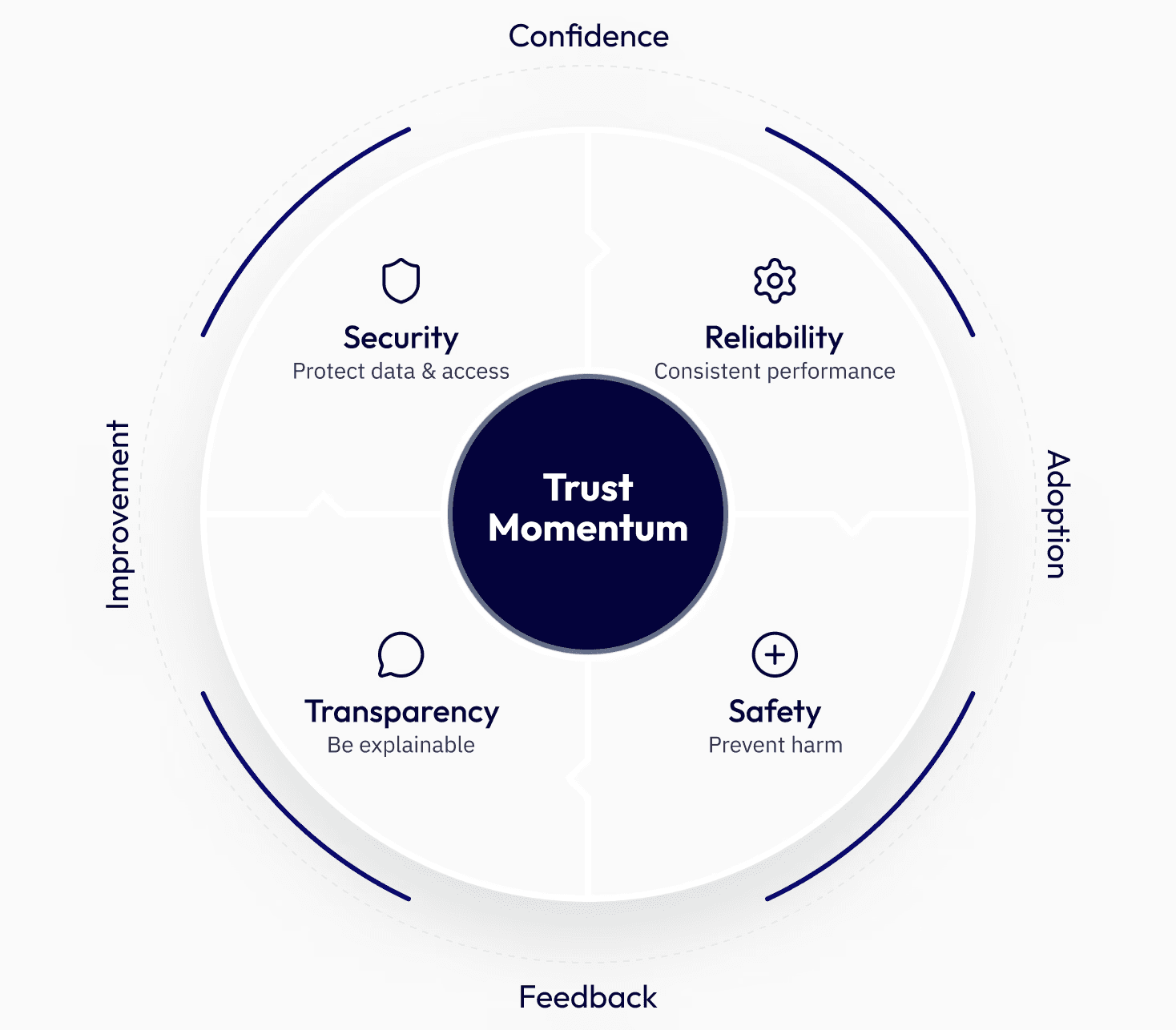

At its foundation are four interdependent pillars that together create the conditions for sustained trust.

Reliability

ensures accuracy, speed and performance consistent with user expectations, building credibility around where the tool can actually make a difference, accelerating adoption.

Transparency

enables users to understand why AI produced specific outputs, e.g. by making reasoning and decision-making visible, or by incorporating explainability tools that enable human interpretation, collaboration, and ongoing product improvement.

Safety

prevents harmful situations that might arise from anticipated errors, misuse of the application, and similar failure modes, through proactive incorporation of guardrails, oversight, feedback loops, and design constraints, sustaining trust and driving continued use and feedback.

Security

protects users from systems deviating from the original intended use, keeping data and access safe while embedding integrity into infrastructure and establishing the foundation for confidence.

Trust Flywheel

© 2025 Arionkoder. All rights reserved.

Trust Patterns: Proven solutions for recurring trust gaps

If the pillars define the principles, Trust Patterns define the practice.

A Trust Pattern is a small, reusable design solution to a recurring trust challenge in AI systems. Each one turns a challenge affecting one or more of the pillars (security, reliability, safety, or transparency) into a concrete, validated mechanism that teams can implement in a product, test in the field, and evaluate with objective measurements.

Trust Patterns make trust operational. They translate risks and challenges detected with the framework into tangible features, workflows, and checks that ensure systems are not only compliant on paper but trustworthy in behavior. Each pattern is general enough to apply across architectures and industries, yet specific enough to guide measurable implementation. Furthermore, their modular design enables seamless integration with minimal changes, saving cost and effort while enabling resilience and trustworthiness.

Key Characteristics and Requirements for Trust Patterns

Actionable, not abstract: Each pattern is a concrete implementation approach, not just a principle. For example, Red Flag Detection & Human Escalation is a pattern; “be safe” is not.

Addresses a human mental model: Patterns respond to how users actually evaluate trust, which usually takes the form of questions about risk. Does it work well? What happens when it fails? Can I understand it? Can I control it?

Reusable across contexts: The same pattern (like Confidence Scoring, Audit Trails, or Graceful Degradation) can be adapted across industries and use cases.

Measurable: Patterns can be instrumented with metrics such as success rates, escalation frequency, or explanation clarity scores. If you cannot assign a metric to a pattern, revisit how it is defined.

Built-in, not added: Patterns are embedded in the system architecture from the start, not bolted on as documentation or compliance afterthoughts. Each one must materialize a concrete risk-mitigation effort, not just a concept.
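The “Measurable” requirement can be made concrete with very little code. Below is a minimal Python sketch, assuming each decision guarded by a pattern is logged as an event with three boolean flags. The `PatternEvent` schema, the `trust_metrics` helper, and the override rate (a signal not named in the list above) are hypothetical; a real system would read these events from its own telemetry.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PatternEvent:
    """One logged decision from an AI feature guarded by a Trust Pattern (hypothetical schema)."""
    escalated: bool   # the pattern routed this case to a human
    overridden: bool  # a human changed the AI's output
    succeeded: bool   # the final outcome was later judged correct


def trust_metrics(events: list[PatternEvent]) -> dict[str, float]:
    """Turn raw pattern events into rates between 0 and 1."""
    n = len(events)
    if n == 0:
        # No traffic yet: report zeros rather than dividing by zero.
        return {"success_rate": 0.0, "escalation_rate": 0.0, "override_rate": 0.0}
    return {
        "success_rate": sum(e.succeeded for e in events) / n,
        "escalation_rate": sum(e.escalated for e in events) / n,
        "override_rate": sum(e.overridden for e in events) / n,
    }


log = [
    PatternEvent(escalated=False, overridden=False, succeeded=True),
    PatternEvent(escalated=True, overridden=True, succeeded=True),
    PatternEvent(escalated=True, overridden=False, succeeded=False),
    PatternEvent(escalated=False, overridden=False, succeeded=True),
]
print(trust_metrics(log))
# {'success_rate': 0.75, 'escalation_rate': 0.5, 'override_rate': 0.25}
```

Computed per pattern over a rolling time window, rates like these are the kind of signal a team could track to check whether a pattern is doing its job.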

Patterns can be combined to form a Trust Blueprint for a product, feature, or agent. They are modular, testable, and reusable, ensuring every AI product can scale trust systematically. This is how trustworthiness is operationalized: the bridge between “AI should be trustworthy” and “here’s exactly how we build that into the system.”

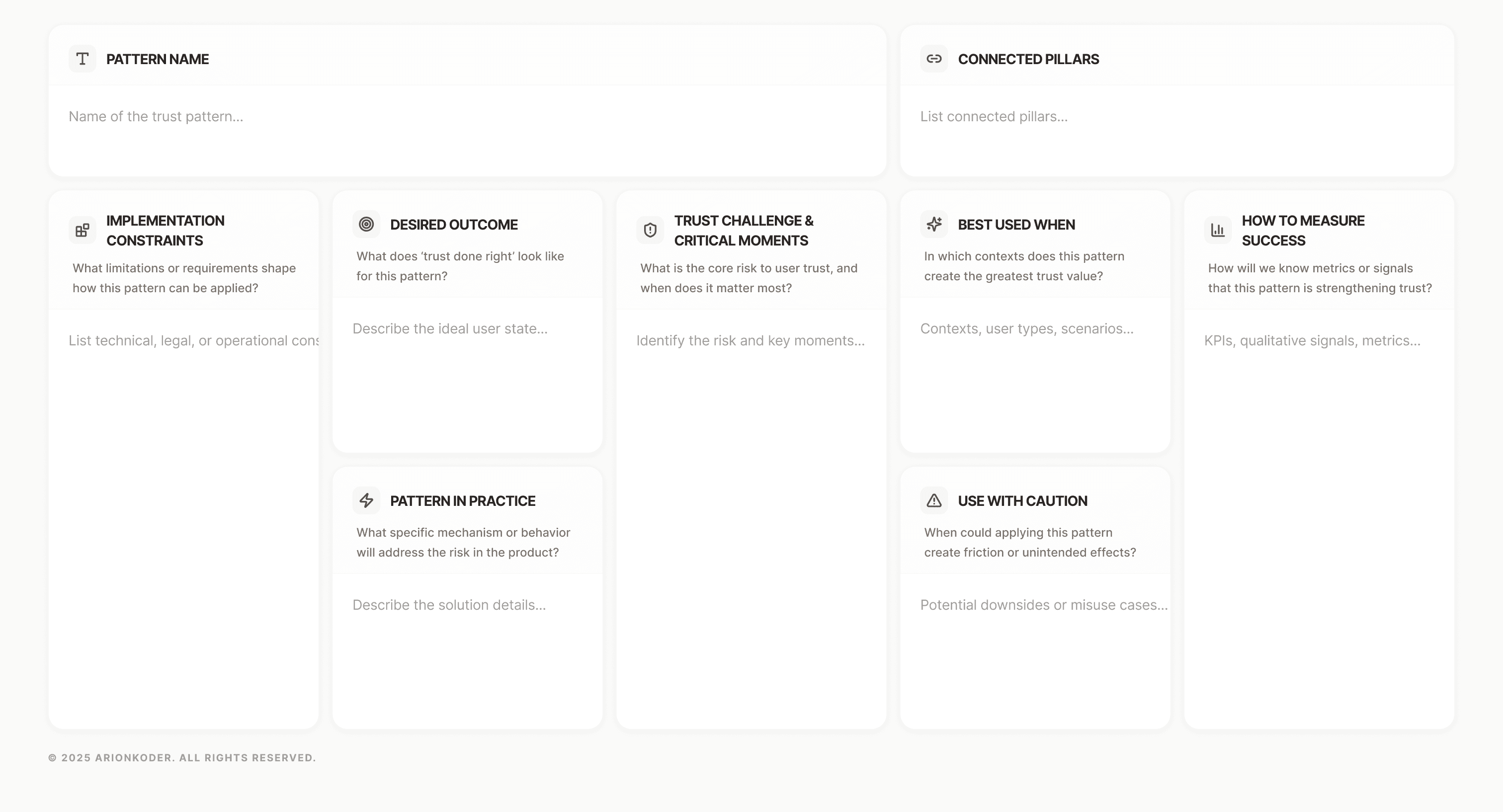

Structure of a Trust Pattern

Each pattern follows a consistent structure that helps teams understand both why trust is at risk and how to preserve it:

What is the core risk to user trust, and when does it matter most?

What does ‘trust done right’ look like for this pattern?

What limitations or requirements shape how this pattern can be applied?

What specific mechanism or behavior will address the risk in the product?

In which contexts does this pattern create the greatest trust value?

When could applying this pattern create friction or unintended effects?

Which metrics or signals will tell us that this pattern is strengthening trust?
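Because every pattern answers the same set of questions, a catalog entry maps naturally onto a record type. The Python sketch below is one hypothetical way to encode it: the `TrustPattern` and `Pillar` names, the field names, and the validation rules are assumptions, and the example values are illustrative rather than taken from the Trust Patterns Library.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Pillar(Enum):
    RELIABILITY = "reliability"
    TRANSPARENCY = "transparency"
    SAFETY = "safety"
    SECURITY = "security"


@dataclass
class TrustPattern:
    """One catalog entry; each field answers one of the structure questions."""
    name: str
    pillars: frozenset[Pillar]  # which pillars the pattern reinforces
    risk: str                   # core risk to user trust, and when it matters most
    trust_done_right: str       # what success looks like
    constraints: list[str]      # limitations or requirements
    mechanism: str              # the concrete behavior added to the product
    best_contexts: list[str]    # where it creates the most trust value
    side_effects: list[str]     # where it could add friction
    metrics: list[str]          # signals that it is strengthening trust

    def __post_init__(self) -> None:
        # Reject entries that serve no pillar or break the "Measurable" requirement.
        if not self.pillars:
            raise ValueError(f"{self.name}: must reinforce at least one pillar")
        if not self.metrics:
            raise ValueError(f"{self.name}: needs at least one metric")


# Illustrative values only; not an entry from the actual Trust Patterns Library.
red_flag = TrustPattern(
    name="Red Flag Detection & Human Escalation",
    pillars=frozenset({Pillar.SAFETY, Pillar.RELIABILITY}),
    risk="A high-severity case is handled automatically and handled wrongly",
    trust_done_right="Risky cases reach a qualified person before any action is taken",
    constraints=["Requires reviewers available within the response window"],
    mechanism="Rule- and score-based flags route matching cases to a review queue",
    best_contexts=["Incident triage", "Clinical intake"],
    side_effects=["Too many flags slow reviewers and cause alert fatigue"],
    metrics=["escalation frequency", "missed red flags per 1,000 cases"],
)
```

Validating in `__post_init__` means an entry without a metric fails as soon as it is created, which mirrors the rule that a pattern without a metric needs rethinking.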

Each pattern reinforces one or more Trust Pillars. Some focus on a single dimension, while others bridge multiple aspects. Together, they form adaptable and systemic solutions that teams can combine to make trust an inherent part of their AI design language.

The Trust Patterns library is a living system. New patterns emerge through field testing and are validated through measurable impact before being added to the catalog. In this way, trust becomes not a static checklist but a continuously improving practice.

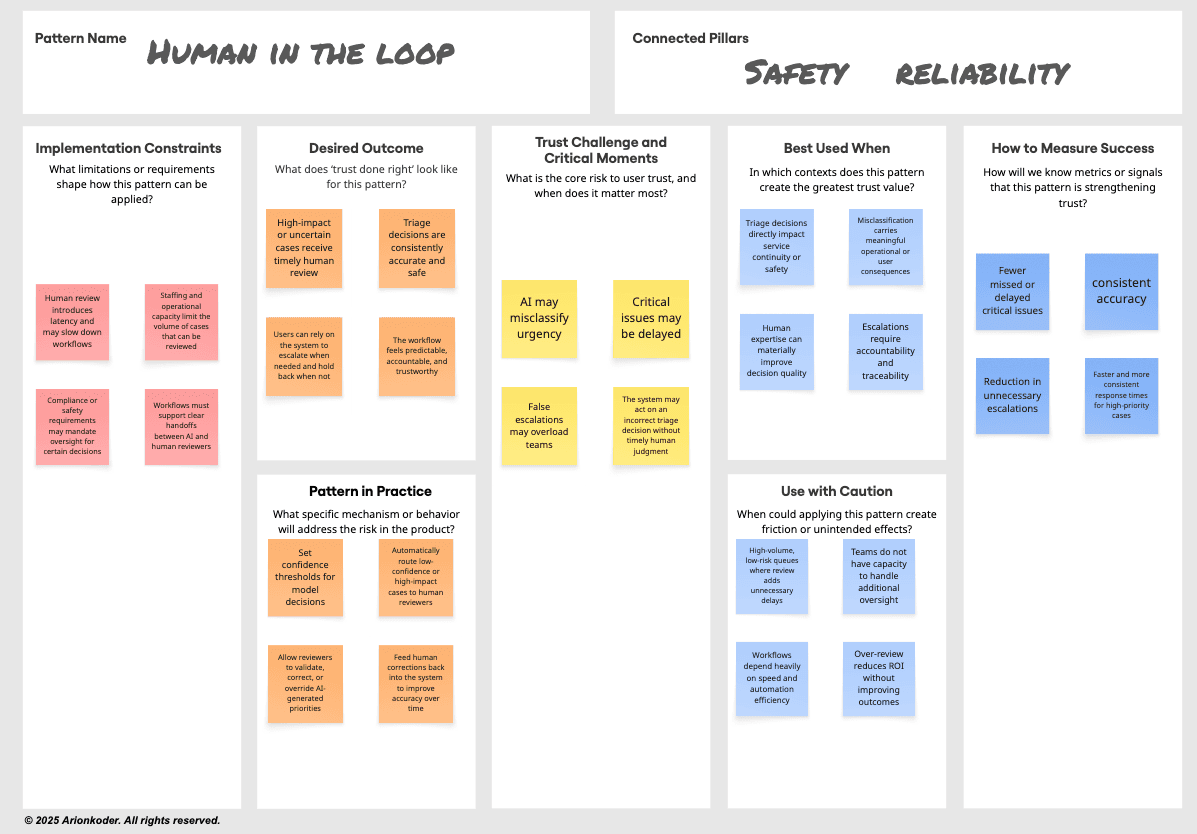

For instance, the Human-in-the-Loop principle emerged as non-negotiable across all stakeholder groups. It prevents full autonomy, ensures supervision and override capabilities, and strengthens collaboration between humans and AI, rather than shifting all responsibility to the machine. Trust emerges strongest when AI functions as augmentation, not replacement.

Examples of Trust Patterns

Here’s how Trust Patterns translate from framework to real application.

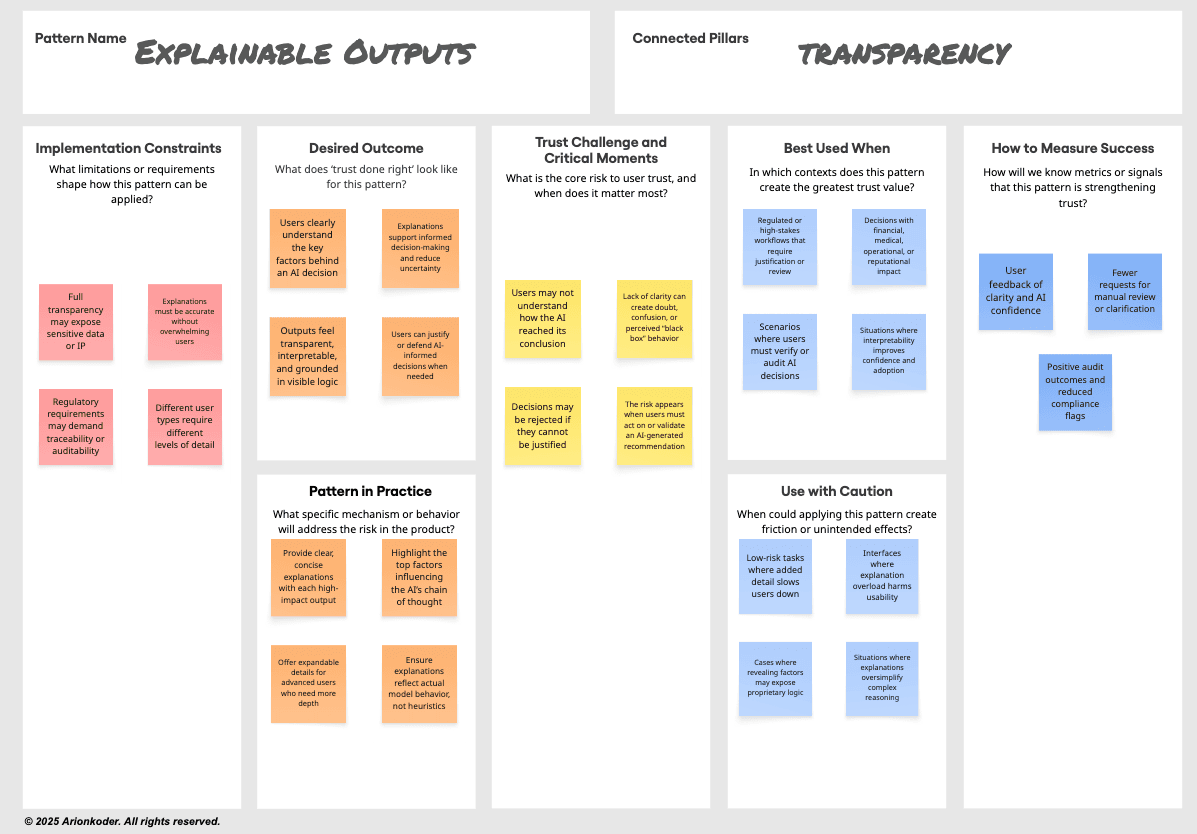

In the case of a Triage Agent, an AI system that classifies and prioritizes support or incident tickets, the trust pillars are critical. These systems directly influence how quickly and accurately issues are addressed, which can affect customer satisfaction, service continuity, and even safety in high-stakes situations. When the AI misclassifies or escalates incorrectly, the consequences are immediate and tangible, making reliability, safety, and transparency non-negotiable.

The following three examples show how LLM as a Judge Evaluator, Human in the Loop, and Explainable Outputs can be embedded to make the system more reliable, transparent, and safe, ensuring that humans understand, oversee, and confidently act on the AI’s decisions.

LLM as a Judge Evaluator
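As a minimal sketch of how this pattern could sit inside a Triage Agent, assume the agent emits a priority, a confidence score, and a short rationale for each ticket, and that a second model acts as the judge, grading whether the rationale supports the priority. Anything that fails a check is routed to a person (Human in the Loop), and both the rationale and the routing reason stay visible (Explainable Outputs). The priority labels, thresholds, and the `judge` callable are hypothetical; no specific LLM API is assumed, and the stub judge only stands in for one.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class TriageDecision:
    ticket_id: str
    priority: str      # hypothetical labels, "P1" (most urgent) to "P4"
    confidence: float  # classifier confidence in [0, 1]
    rationale: str     # short explanation shown to the reviewer


@dataclass(frozen=True)
class Routing:
    route: str   # "auto" or "human_review"
    reason: str  # why, so the routing itself is explainable


def route_ticket(
    decision: TriageDecision,
    judge: Callable[[TriageDecision], float],
    min_confidence: float = 0.8,
    min_judge_score: float = 0.7,
    always_review: frozenset[str] = frozenset({"P1"}),
) -> Routing:
    """Auto-apply a triage decision only if it clears every check; otherwise escalate."""
    # Highest-severity tickets always get human sign-off, however confident the model is.
    if decision.priority in always_review:
        return Routing("human_review", f"{decision.priority} requires human sign-off")
    if decision.confidence < min_confidence:
        return Routing("human_review", f"low confidence ({decision.confidence:.2f})")
    # LLM as a judge: a second model grades whether the rationale supports the priority.
    score = judge(decision)
    if score < min_judge_score:
        return Routing("human_review", f"judge score {score:.2f} below threshold")
    return Routing("auto", "passed confidence and judge checks")


# Stand-in judge for the example; a real one would prompt a separate LLM with a rubric.
def stub_judge(d: TriageDecision) -> float:
    return 0.9 if d.rationale.strip() else 0.1


print(route_ticket(TriageDecision("T-1", "P3", 0.93, "Password reset for one user"), stub_judge).route)  # auto
print(route_ticket(TriageDecision("T-2", "P1", 0.99, "Checkout down for all users"), stub_judge).route)  # human_review
print(route_ticket(TriageDecision("T-3", "P2", 0.95, ""), stub_judge).route)  # human_review
```

Thresholds like these would need calibrating against real error costs and reviewer capacity, and the recorded routing reasons can double as an audit trail.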


The Implementation Path shows how the Trust by Design framework connects with business strategy, product discovery, and delivery. It turns trust from an abstract principle into an operational implementation process that teams can follow from the very beginning to materialize user requirements.

At Arionkoder, we embed user adoption considerations early in the research and discovery phase, when product opportunities and risks are first identified. This ensures that trust is not added later, at the expense of adoption, but designed into the foundation of every system component, resulting in more robust and trustworthy AI platforms.

While the previous section focused on the micro level, showing how individual patterns solve specific challenges, this section outlines the macro journey that moves an organization from intent to execution.

These steps demonstrate the entire process, from assessing readiness to internal enablement and communication. Over time, trust becomes a natural part of how products are conceived and built. It evolves from an external check into a shared mindset that shapes culture, accelerates adoption, and amplifies the value of AI across the organization.

The Trust OS Framework Implementation Path

The implementation path illustrates how organizations develop trustworthy AI step by step, aligning technical decisions with user needs, business goals, and continuous learning.

1. Assess Readiness

Map existing AI use cases, data maturity, and governance gaps. Understand the current state of systems and processes to identify where trust risks may emerge.

2. Understand the Problem

Define the core business challenge and determine where AI adds real value. Frame the opportunity in terms of outcomes, risks, and user impact. Assess the potential impact of system errors to set accuracy thresholds and requirements.

3. Align Trust Needs with Business KPIs and User Goals

Map user flows to identify critical trust moments, points where reliability, safety, transparency, or security are most essential. Align these with business metrics and with the outcomes defined while understanding the problem, to ensure trust directly supports performance and adoption.

4. Define Solutions and Trust Patterns

Match technical and UX layers with the appropriate Trust Patterns. Each solution should make trust measurable and visible in both system behavior and user experience.

5. Integrate Observability

Embed monitoring mechanisms and dashboards that track key trust indicators for the four pillars. Observability transforms trust from a static goal into a continuous practice.

6. Train and Enable Teams

Build internal capabilities around trustworthy AI by design. Provide training, playbooks, and processes that empower teams to identify and apply trust mechanisms consistently.

7. Communicate Trust

Make trust needs and adoption challenges explicit across teams and with clients. Clearly articulate the risks, the user concerns, and how the framework addresses them so everyone understands why these mechanisms matter.
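The “Understand the Problem” step asks teams to assess the impact of system errors before setting accuracy thresholds. One standard way to do this, swapped in here as an illustration rather than as a prescribed part of the framework, is expected-cost minimization: given a labeled validation set and rough estimates of what an unreviewed error and a human review each cost, pick the confidence threshold above which outputs are accepted automatically. The function names and cost figures below are hypothetical.

```python
from __future__ import annotations


def expected_cost(
    samples: list[tuple[float, bool]],
    threshold: float,
    cost_error: float,
    cost_review: float,
) -> float:
    """Average cost per case if outputs with confidence >= threshold are auto-accepted."""
    if not samples:
        raise ValueError("need at least one labeled sample")
    total = 0.0
    for confidence, correct in samples:
        if confidence >= threshold:
            if not correct:
                total += cost_error  # an unreviewed mistake reaches the user
        else:
            total += cost_review     # a person reviews this case
    return total / len(samples)


def pick_threshold(
    samples: list[tuple[float, bool]], cost_error: float, cost_review: float
) -> float:
    """Return the candidate threshold with the lowest expected cost."""
    # Candidates: every observed confidence, plus infinity (= escalate everything).
    # min() keeps the first of any ties, i.e. the lowest threshold, which escalates least.
    candidates = sorted({c for c, _ in samples}) + [float("inf")]
    return min(candidates, key=lambda t: expected_cost(samples, t, cost_error, cost_review))


# (model confidence, was the output correct?) on a small labeled validation set
validation = [(0.95, True), (0.90, True), (0.85, False), (0.70, True), (0.60, False)]
print(pick_threshold(validation, cost_error=10.0, cost_review=1.0))  # 0.9
print(pick_threshold(validation, cost_error=1.0, cost_review=10.0))  # 0.6
```

When errors are expensive relative to reviews, the threshold rises and more cases reach a person; when reviewer time is the scarcer resource, it falls. A real validation set would need to be much larger, and the chosen threshold re-checked as part of ongoing observability.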

The process may involve a learning curve at first, as teams adjust to new ways of framing problems and embedding trust signals. Yet with repetition, it becomes increasingly efficient and intuitive. Each iteration strengthens alignment, reinforces consistency, and builds confidence, while expanding the pool of Trust Patterns tailored to your specific goals. Over time, trust ceases to be an additional layer and becomes an inherent part of how products are designed and how the organization operates.

AI’s future will be defined by the organizations that succeed in driving AI adoption, and the best way to keep users relying on AI tools is for these systems to earn lasting trust. The organizations that lead will be those that implement AI by treating trust as an operating system, embedded in every decision, interaction, and line of code of their solutions.

This research has shown how to move from awareness to execution, from recognizing the trust gap to operationalizing reliability, safety, transparency, and security through repeatable mechanisms.

The next step is application.

Arionkoder’s Trust OS Framework and Trust Patterns Library offer a practical way to turn principles into measurable action. They help teams design for trust from the very beginning, making it observable, testable, and scalable. With each cycle, the process becomes faster, more intuitive, and more aligned with both user expectations and business goals. Trust stops being an initiative and becomes part of how the organization thinks and builds.



Email: hello@arionkoder.com

Offices: Americas · EMEA (5 offices)
