Applied AI

Product & Design

Democratizing Early Breast Cancer Detection

Arionkoder helped iSono Health improve the app and AI behind its 3D breast scanner for early cancer detection, all in just 8 weeks. The new design reduced false positives and review time, helping doctors make faster, more confident decisions.

ALIGN

We began by deeply understanding ATUSA’s context: the product, the technology, and the people who rely on it.

Our team interviewed radiologists, breast-cancer surgeons, and imaging specialists to capture their expectations, frustrations, and level of trust in AI. We also reviewed industry studies showing how ABUS-based AI can reduce unnecessary recalls compared to standard mammography — framing AI not as a novelty, but as a potential game-changer in breast cancer detection.

In parallel, we ran a full Design Sprint, supported by interviews and benchmarking, to understand both iSono’s internal challenges and the realities faced by their end users.

PRIORITIZE

From this discovery work, we identified two high-impact workstreams:

UX/UI workstream

Focus: streamline radiologist workflows, reduce friction and clicks, and surface the most relevant images first to reduce review time and cognitive load.

AI/ML workstream

Focus: reduce false positives, improve confidence scores, and ensure that model performance improvements translated into real downstream clinical value.

We conducted qualitative and quantitative research, stakeholder interviews, usability testing, and industry benchmarking to refine the interface across three key stages of the radiologist journey. In AI/ML, we audited training and validation data, reviewed and tested the model’s training code, and quantified false positives across cases.

PROVE

With priorities clear, we moved to prove impact in both tracks:

UX/UI track

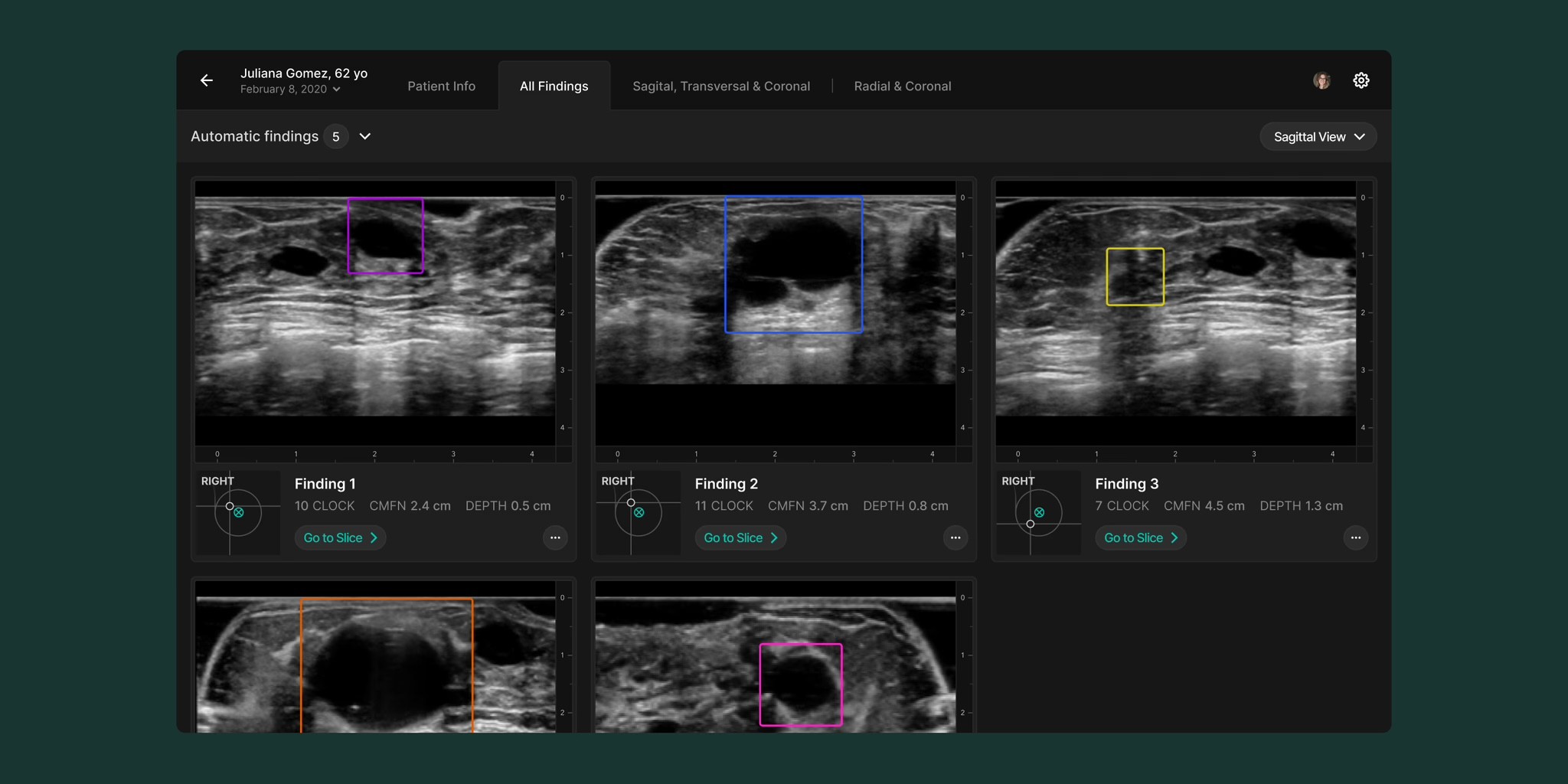

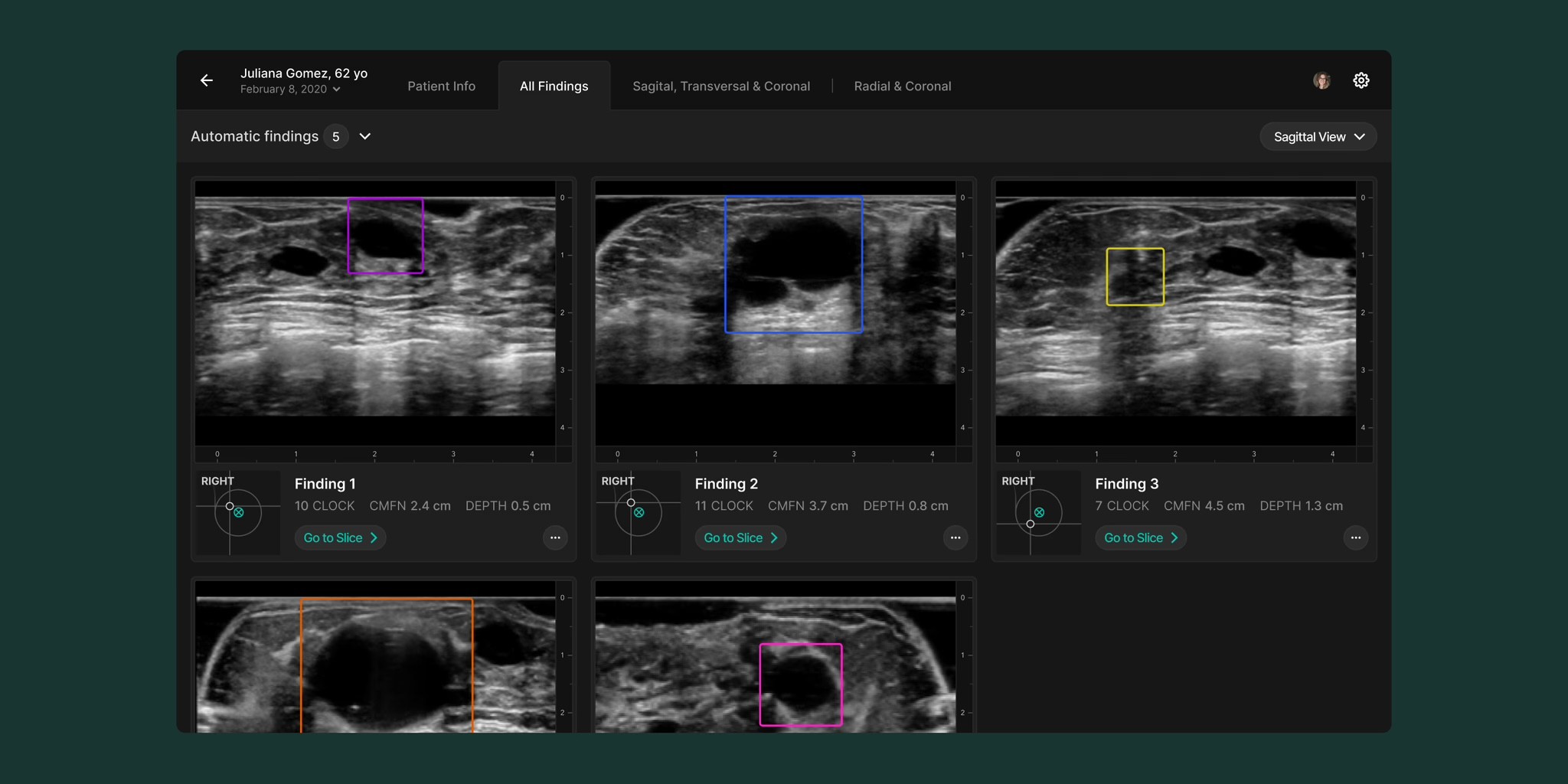

We streamlined the workflow by reducing clicks and removing friction, enabling doctors to access critical 3D images and AI-marked insights faster. This helped them more quickly distinguish between easy and complex cases, significantly reducing time spent per review.

AI/ML track

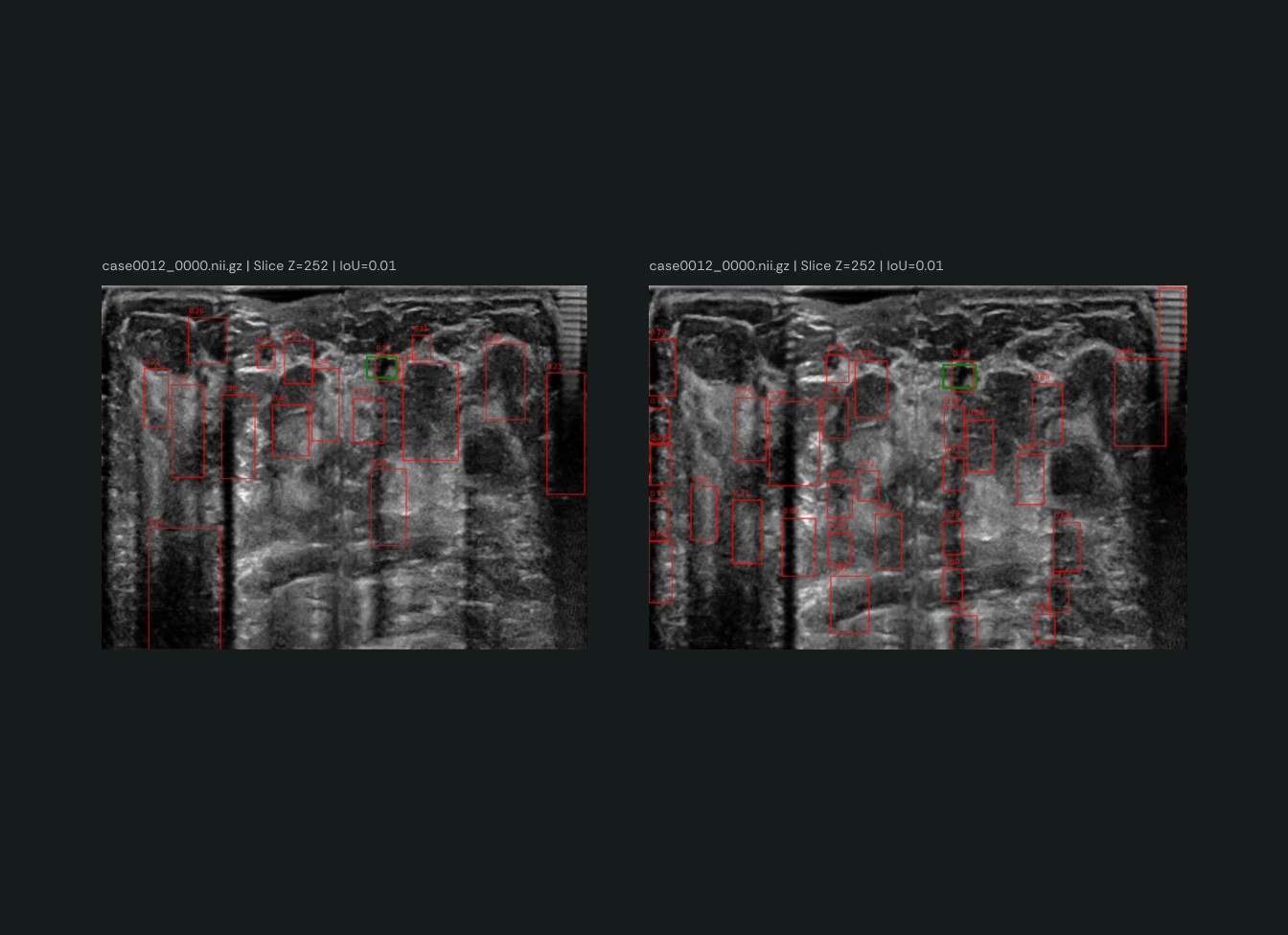

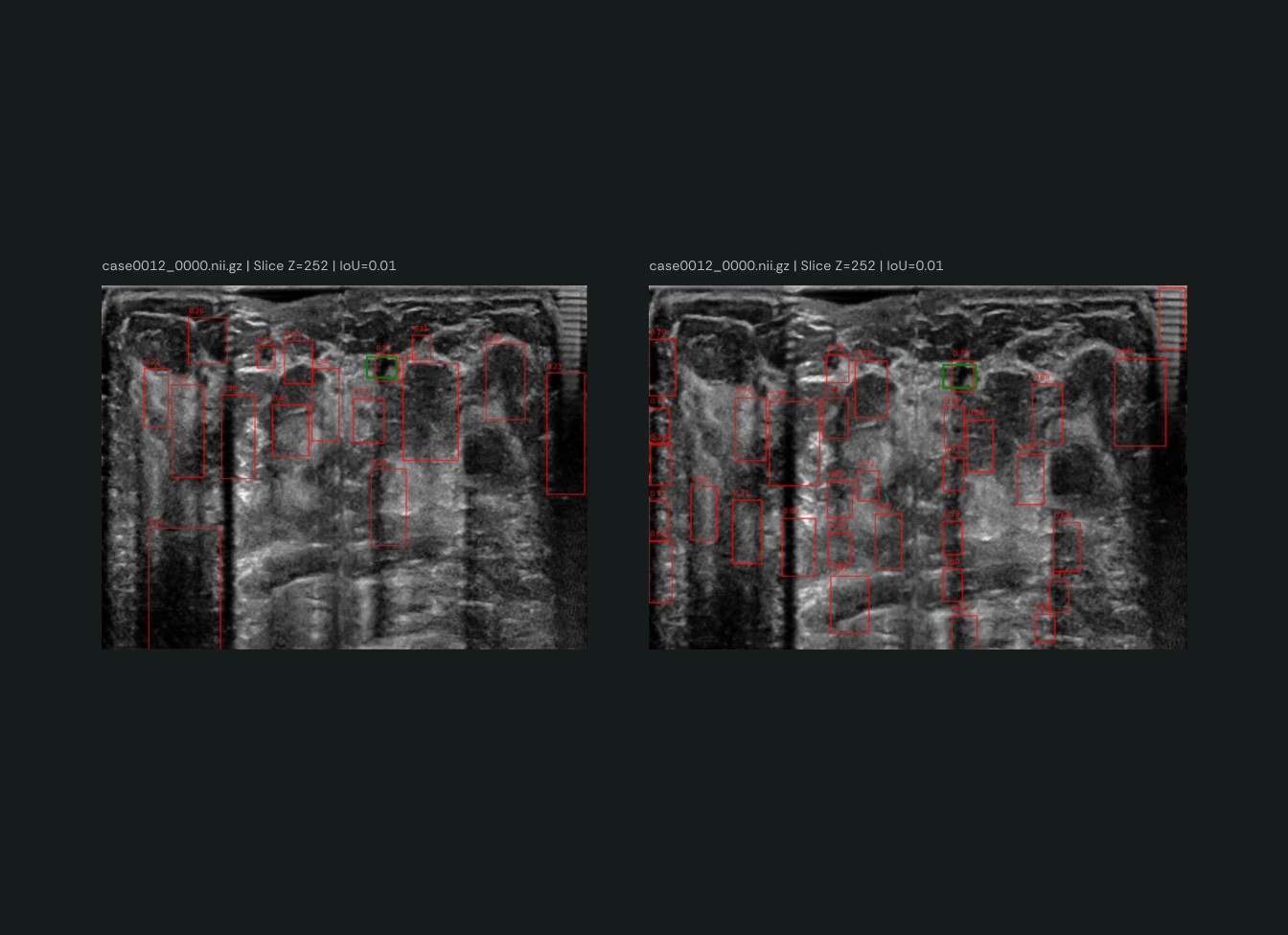

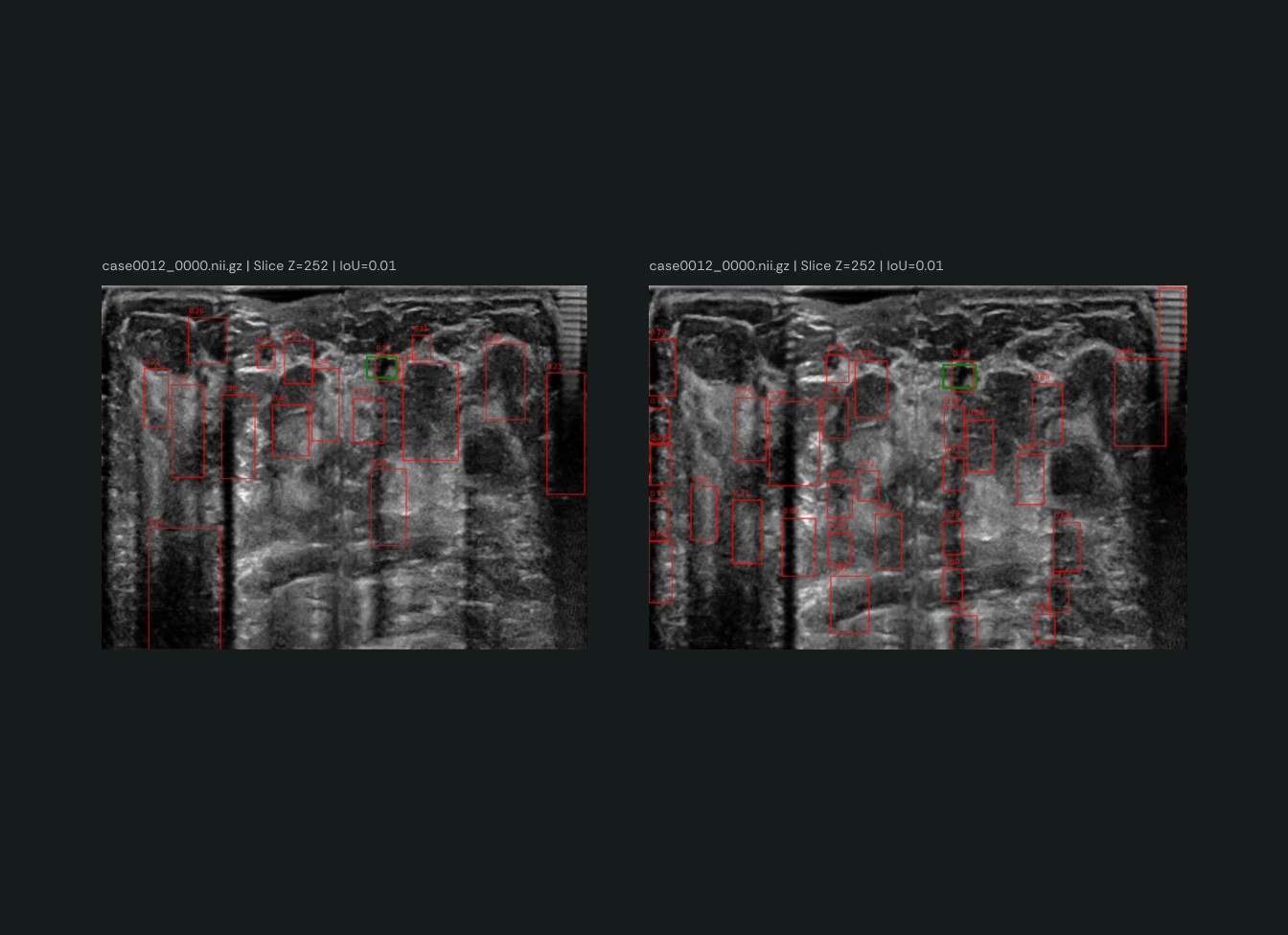

We introduced targeted modifications to the learning process — including hyperparameter changes within the focal loss function and experiments to assess their contribution.
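For readers unfamiliar with focal loss, the sketch below shows the standard binary form and the two hyperparameters usually tuned in it. It is a minimal illustration, not iSono's training code: the source does not say which values were changed, so the defaults `gamma=2.0` and `alpha=0.25` are simply commonly used starting points, and the function names are hypothetical.

```python
import math


def binary_focal_loss(p, y, gamma=2.0, alpha=0.25, eps=1e-7):
    """Focal loss for a single prediction.

    p     -- predicted probability of the positive class (0..1)
    y     -- ground-truth label: 1 (finding) or 0 (no finding)
    gamma -- focusing parameter; gamma = 0 gives alpha-weighted cross-entropy
    alpha -- weight applied to the positive class (1 - alpha to the negative)
    """
    p = min(max(p, eps), 1.0 - eps)          # clamp to avoid log(0)
    p_t = p if y == 1 else 1.0 - p           # probability given to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t) ** gamma shrinks the loss of examples the model already gets right
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def mean_focal_loss(probs, labels, gamma=2.0, alpha=0.25):
    """Average focal loss over a batch of (probability, label) pairs."""
    losses = [binary_focal_loss(p, y, gamma, alpha) for p, y in zip(probs, labels)]
    return sum(losses) / len(losses)
```

Raising `gamma` pushes training toward hard, misclassified examples, while `alpha` rebalances positives against negatives; both are plausible levers when the goal is fewer false positives, which is why experiments isolating each change are useful.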

As a result, we:

Boosted model accuracy from 70% to 90% (a 28.6% relative improvement)

Achieved a 28.7% reduction in false detections

This led to faster, more trustworthy scan reviews and more reliable confidence scores.
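The relative-improvement figure can be checked with a few lines of arithmetic. This sketch only reproduces the accuracy calculation from the numbers stated above; the helper name `relative_change` is illustrative.

```python
def relative_change(before, after):
    """Change relative to the starting value, e.g. 0.25 means +25%."""
    return (after - before) / before


# Accuracy went from 70% to 90%: a gain of 20 percentage points,
# which is 20 / 70 of the original value.
accuracy_gain = relative_change(0.70, 0.90)
print(f"{accuracy_gain:.1%}")  # 28.6%
```

Note the distinction between the 20-percentage-point absolute gain and the 28.6% relative gain; the reduction in false detections is likewise a relative figure.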

We validated our UX and AI improvements through 4 prototype iterations and 2 rounds of user testing, plus a deep dive into radiologist workflows to ensure changes mapped to real practice.

INTEGRATE

To make these improvements usable in the real world, we focused on integration:

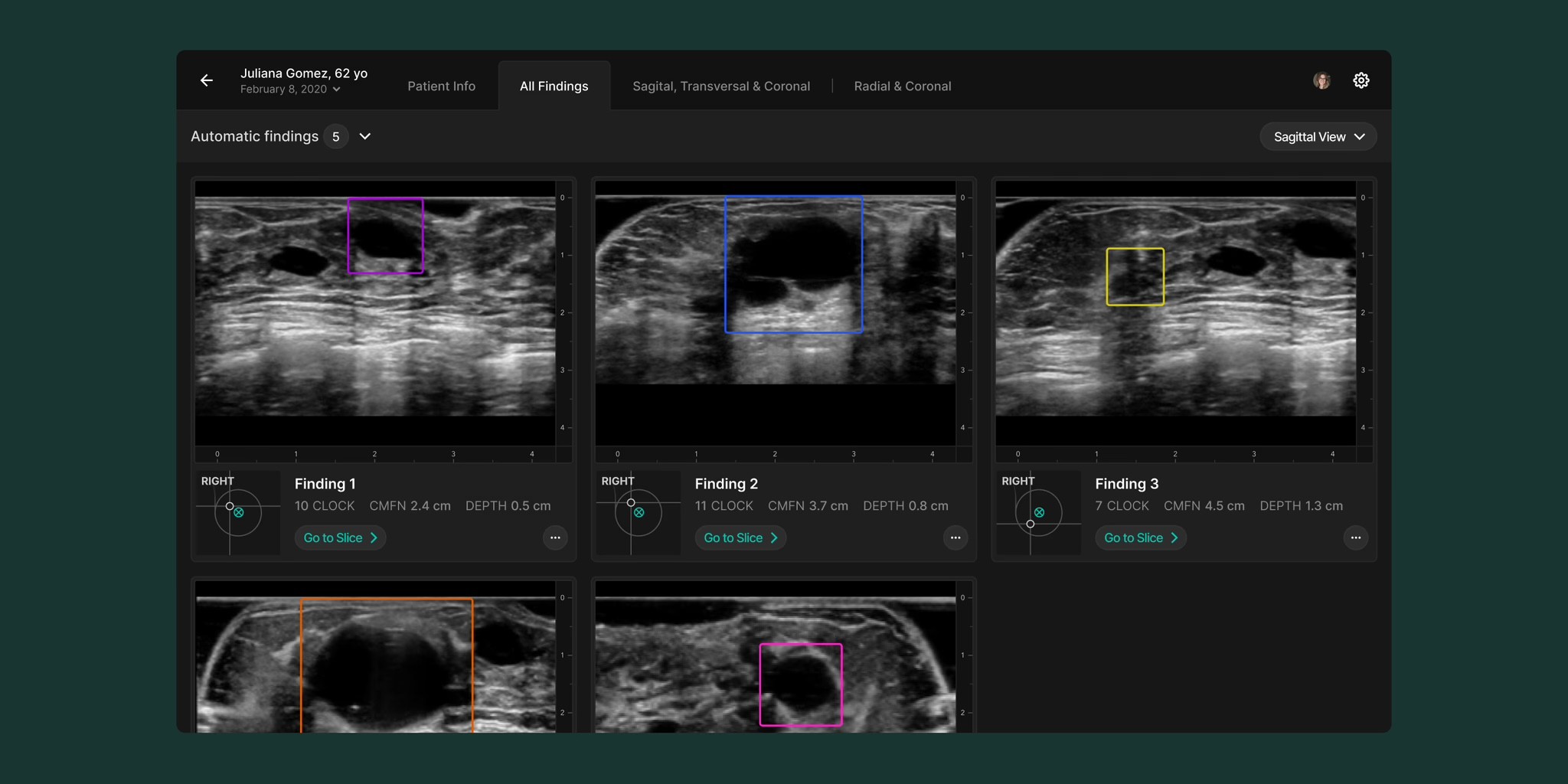

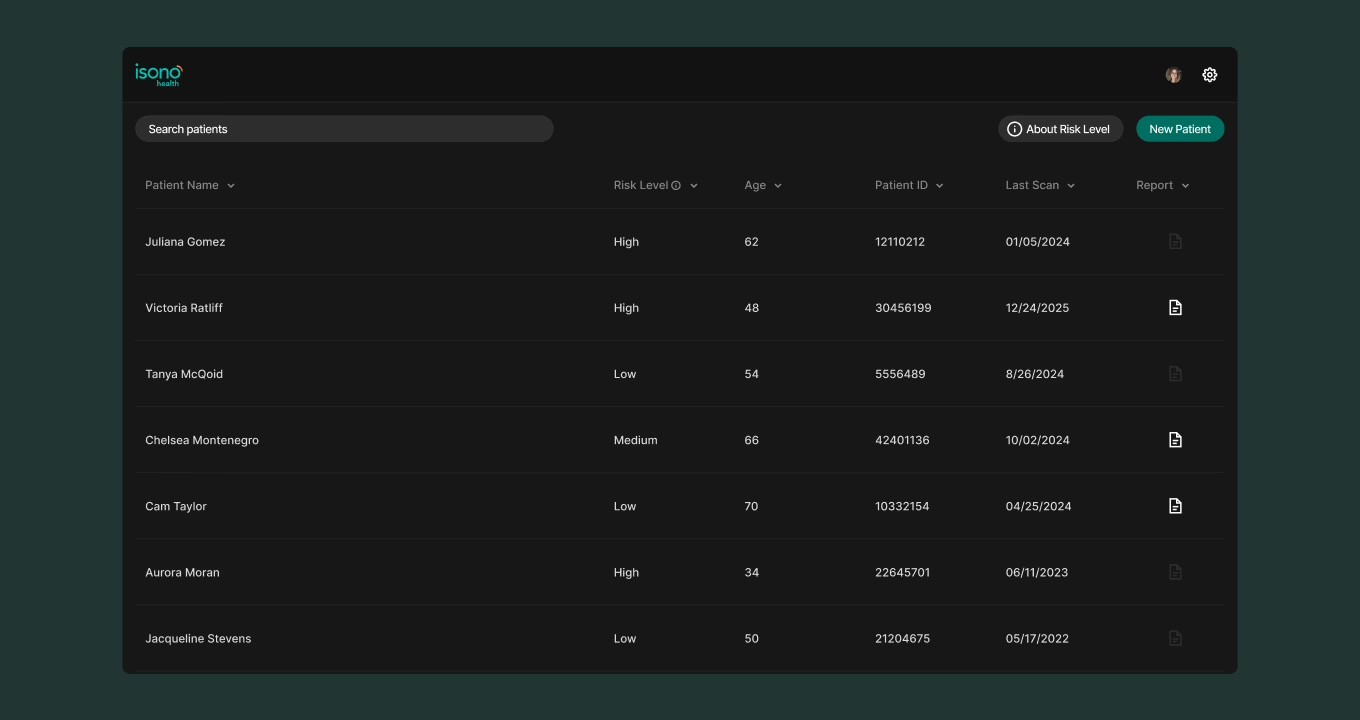

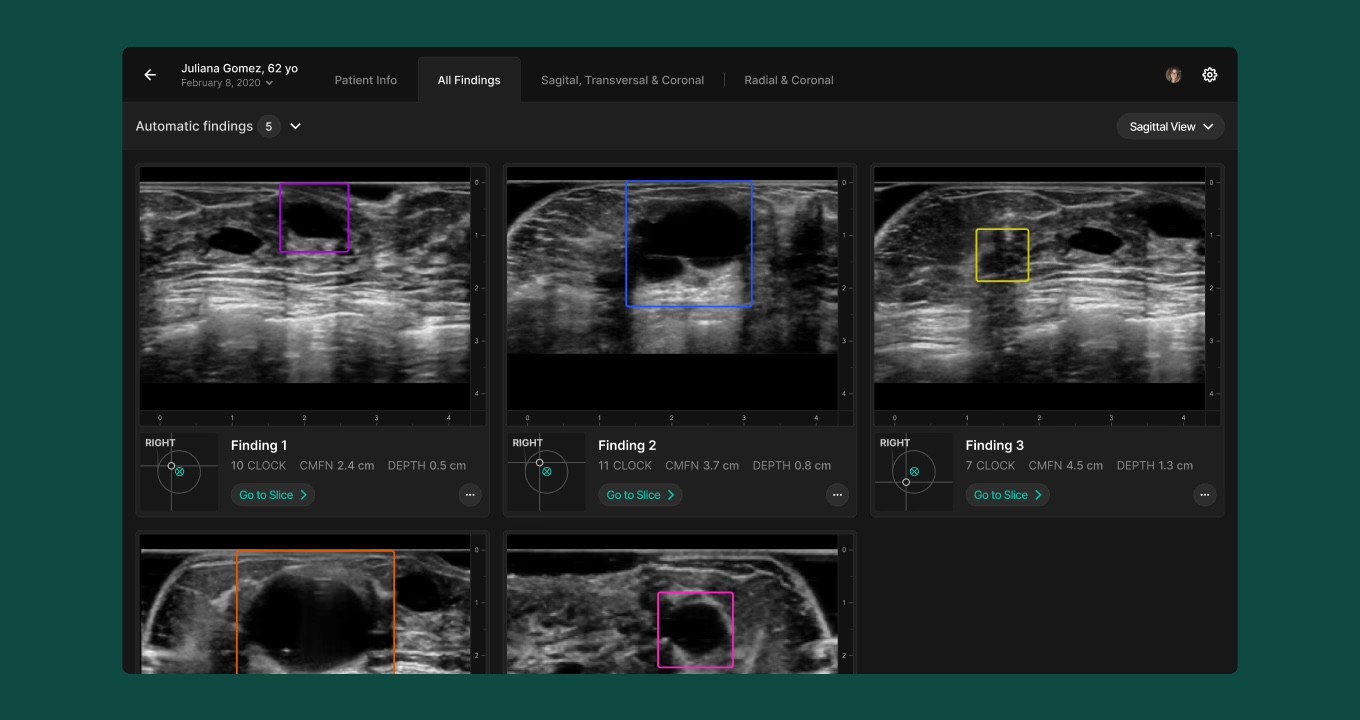

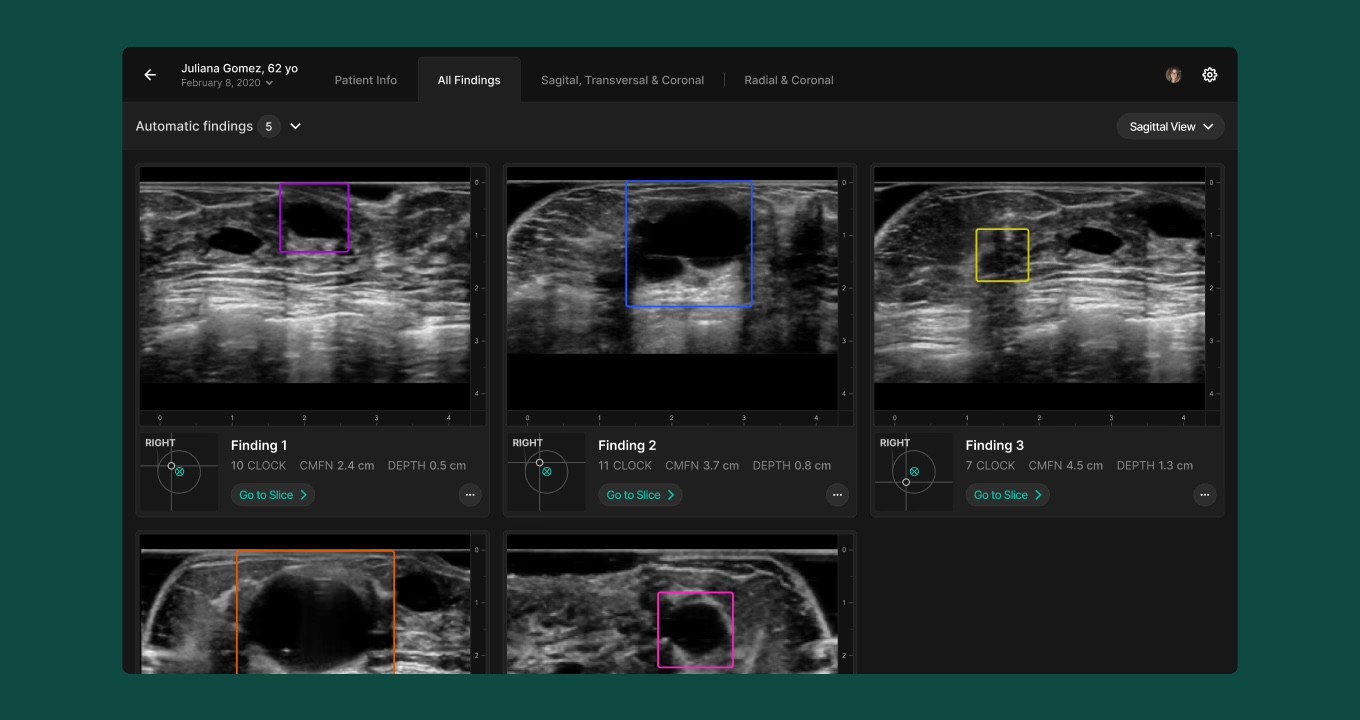

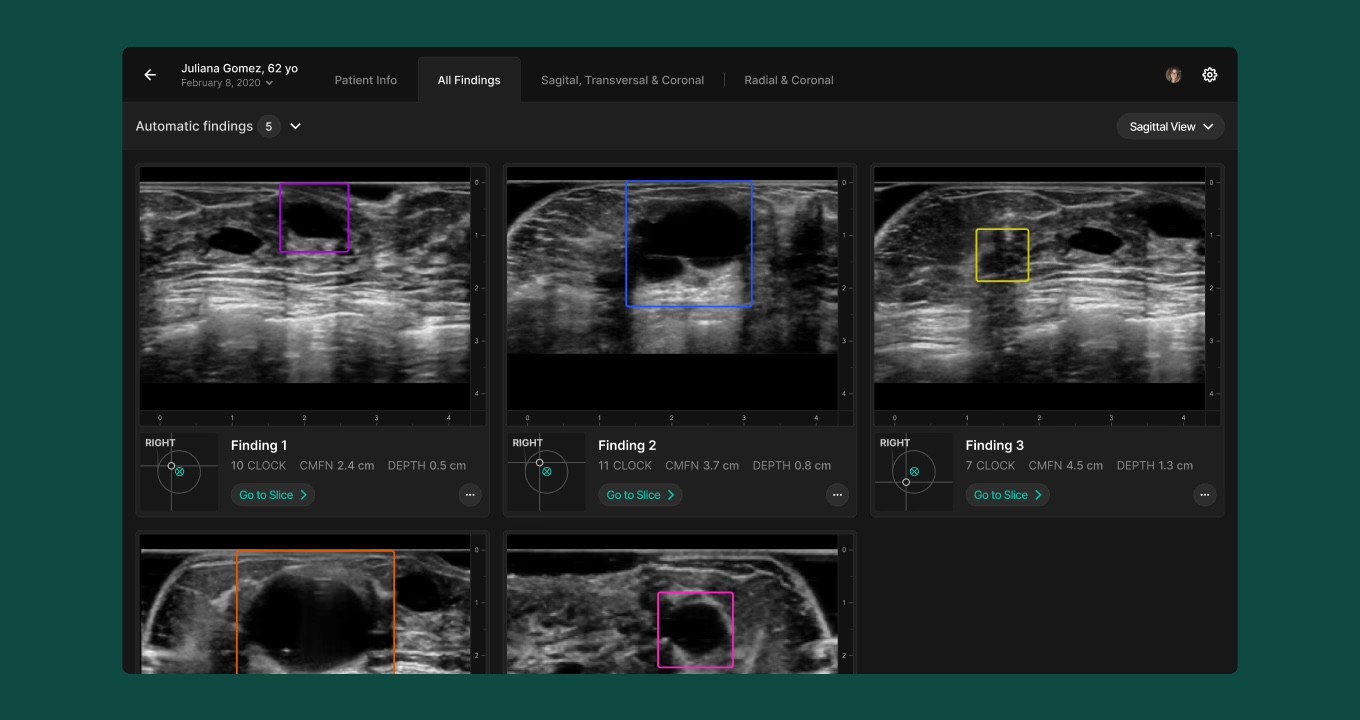

In UX/UI, we redesigned the review interface to surface only the most relevant 3D images (those flagged by AI), with an option to expand into a multi-plane comparison mode. The redesigned Scrubber allows radiologists to navigate slices quickly and correlate findings across planes, improving interpretation speed and clarity.
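One way to picture "surface only the most relevant images" is as a filter-and-sort over per-slice AI scores. The sketch below is an assumption-laden illustration: the source does not describe how flagging is implemented, so the `Slice` type, the `ai_score` field, and the `0.5` threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Slice:
    index: int        # position of the slice within the 3D volume
    ai_score: float   # hypothetical per-slice model confidence (0..1)


def slices_to_review(slices, threshold=0.5):
    """Return only AI-flagged slices, highest confidence first."""
    flagged = [s for s in slices if s.ai_score >= threshold]
    return sorted(flagged, key=lambda s: s.ai_score, reverse=True)


volume = [Slice(0, 0.10), Slice(1, 0.90), Slice(2, 0.60)]
for s in slices_to_review(volume):
    print(s.index, s.ai_score)
```

The full volume stays available; the filtered list only decides what the radiologist sees first before expanding into the multi-plane view.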

In AI/ML, we built internal evaluation tools using Jupyter notebooks and prototyped lightweight interventions in the existing codebase. This allowed iSono to see how different training decisions influenced false positive rates and recall, and to incorporate these learnings into their model lifecycle.
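As an illustration of the kind of check such a notebook might contain, the sketch below computes recall and false positive rate at a given decision threshold. It is not the delivered tooling: the function names and the toy scores and labels are made up for the example.

```python
def confusion_counts(scores, labels, threshold):
    """Count true/false positives and negatives at a decision threshold."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted_positive = score >= threshold
        if predicted_positive and label == 1:
            tp += 1
        elif predicted_positive and label == 0:
            fp += 1
        elif label == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def recall_and_fpr(scores, labels, threshold):
    """Recall = TP / (TP + FN); false positive rate = FP / (FP + TN)."""
    tp, fp, tn, fn = confusion_counts(scores, labels, threshold)
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return recall, fpr


# Toy data, purely illustrative
scores = [0.9, 0.8, 0.4, 0.3, 0.2]
labels = [1, 0, 1, 0, 0]
for threshold in (0.5, 0.35):
    print(threshold, recall_and_fpr(scores, labels, threshold))
```

Running the same comparison across models trained with different settings makes the trade-off between missed findings and false alarms visible for each training decision.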

We also delivered a clear Improvement Roadmap so the team could integrate changes step by step into ATUSA’s product and AI pipeline.

SCALE

Although the engagement ran for just 8 weeks, we aimed beyond a “better model now” and set out what should come next.

We recommended additional hyperparameters and training strategies that iSono could explore to keep improving the model beyond this engagement.

By analyzing false positives from both the original iSono model and our best-performing approach, we identified artifacts in images as a key source of noise — and proposed R&D ideas such as a model that simultaneously predicts artifacts and potential lesions to better discriminate between them.
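A model that predicts artifacts and lesions together is typically trained with a joint objective. The sketch below shows one simple form, a weighted sum of two binary cross-entropy terms. It is only an illustration of the R&D idea: the source does not specify an architecture or loss, and the function names and weights `w_lesion` and `w_artifact` are hypothetical.

```python
import math


def bce(p, y, eps=1e-7):
    """Binary cross-entropy for one predicted probability p and label y."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def joint_loss(p_lesion, y_lesion, p_artifact, y_artifact,
               w_lesion=1.0, w_artifact=0.5):
    """Weighted sum of the lesion and artifact losses for one region.

    Training both outputs from shared features is meant to help the model
    tell an imaging artifact apart from a genuine finding.
    """
    return w_lesion * bce(p_lesion, y_lesion) + w_artifact * bce(p_artifact, y_artifact)
```

The relative weights decide how much the artifact task is allowed to shape the shared features, and would themselves need experimentation.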

These insights gave iSono not just higher accuracy in the short term, but a structured foundation for ongoing AI/ML evolution.

“We're extremely pleased with the outcome of this project. The team did a fantastic job of understanding our needs and delivering a solution that meets our expectations. The user testing sessions were incredibly valuable, and we're excited to implement the design and see the impact it will have on our users. Thank you to the entire team for their hard work and dedication.”

Shadi Saberi, CEO @ iSono Health

How we helped:

1. Aligned Clinical Needs with AI Potential

Interviewed radiologists, surgeons, and imaging specialists to understand where AI could truly help, not hinder, their work.

2. Prioritized UX and Model Performance

Focused on the workflow and model challenges that had the greatest impact on review time, trust, and diagnostic value.

3. Improved Model Accuracy and Reliability

Tuned training strategies to raise accuracy from 70% to 90% and reduce false detections by 28.7%.

4. Refined the Radiologist Experience

Designed a new interface that surfaces AI-flagged 3D images first, reducing the number of slices to review and easing cognitive load.

5. Built Tools for Ongoing Evaluation

Delivered internal evaluation tooling and an AI technical report so iSono can continue testing and refining models.

6. Created a Roadmap for Future AI Innovation

Recommended additional training strategies and R&D ideas, including models to detect artifacts and lesions together.

Team

Product Manager & Facilitator, UX/UI Designer, ML Engineer

Delivery

High-fidelity Figma prototype, improvement roadmap, AI technical report, trained model with higher accuracy

GET STARTED

Ready to make AI useful?

Turning bold ambition into lasting impact starts with a conversation.

© 2025 Arionkoder.

All rights reserved.

Email: hello@arionkoder.com
